You just ran what you thought was a really promising conversion test. In an effort to raise the number of visitors that convert into demo requests on your product pages, you test an attractive new redesign on one of your pages using a good ol’ A/B test. Half of the people who visit that page see the original product page design, and half see the new, attractive design.

You run the test for an entire month, and as you expected, conversions are up — from 2% to 10%. Boy, do you feel great! You take these results to your boss and advise that, based on your findings, all product pages should be moved over to your redesign. She gives you the go-ahead.

But when you roll out the new design, you notice the number of demo requests goes down. You wonder if it’s seasonality, so you wait a few more months. That’s when you start to notice MRR is decreasing, too. What gives?

Turns out, you didn’t test that page long enough for results to be statistically significant. Because that product page only saw 50 views per day, you would’ve needed to wait until over 150,000 people viewed the page before you could achieve a 95% confidence level — which would take over eight years to accomplish. Because you failed to calculate those numbers correctly, your company is losing business.

A risky business

Miscalculating sample size is just one of many mistakes marketers make with conversion rate optimization (CRO). It’s easy for marketers to trick themselves into thinking they’re improving their marketing when, in fact, they’re leading their business down a dangerous path by basing tests on incomplete research, small sample sizes, and so on.

But remember: The primary goal of CRO is to find the truth. Basing a critical decision on faulty assumptions and tests lacking statistical significance won’t get you there.

To help you save time and get over that steep learning curve, here are some of the most common mistakes marketers make with conversion rate optimization. As you test and tweak and fine-tune your marketing, keep these mistakes in mind, and keep learning.

6 CRO mistakes you might be making

1) You think of CRO as mostly A/B testing.

Equating A/B testing with CRO is like assuming every rectangle is a square. A/B testing is a type of CRO, but it’s just one tool of many. An A/B test compares two versions of a single variable to see which performs better, while CRO includes all manner of testing methodologies, all with the goal of leading your website visitors to take a desired action.

If you think you’re "doing CRO" just by A/B testing everything, you’re not being very smart about your testing. There are plenty of occasions where A/B testing isn’t helpful at all, for example, when a page doesn’t get enough traffic to reach an adequate sample size. Does the webpage you want to test get only a few hundred visits per month? Then it could take months to round up enough traffic to achieve statistical significance.
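How much traffic is "enough"? A rough answer comes from the standard normal-approximation formula for comparing two conversion rates. The minimal Python sketch below assumes a two-sided test at 95% confidence with 80% power and uses hypothetical rates; online calculators may use slightly different formulas, so treat its output as a ballpark rather than an exact requirement.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, target: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variant of a two-sided A/B test.

    Normal approximation for two proportions (an assumption of this sketch):
        n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    if not (0 < baseline < 1 and 0 < target < 1) or baseline == target:
        raise ValueError("rates must be in (0, 1) and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = baseline * (1 - baseline) + target * (1 - target)
    n = (z_alpha + z_power) ** 2 * variance / (target - baseline) ** 2
    return math.ceil(n)


# Hypothetical page: 2% baseline conversion rate, 50 visitors per day.
for target in (0.022, 0.025, 0.03):
    n = sample_size_per_variant(0.02, target)
    total = 2 * n
    print(f"2% -> {target:.1%}: {n:,} per variant, {total:,} total, "
          f"about {total / 50:,.0f} days at 50 visits/day")
```

Under these assumptions, detecting a lift from 2% to 2.2% takes roughly 80,000 visitors per variant (over 160,000 in total), or close to nine years at 50 visits a day. The smaller the lift you want to detect, the faster the required sample grows.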

If you A/B test a page with low traffic and then decide six weeks down the line that you want to stop the test, then that’s your prerogative — but your test results won’t be based on anything scientific.

A/B testing is a great place to start with your CRO education, but it’s important to educate yourself on many different testing methodologies so you aren’t restricting yourself. For example, if you want to see a major lift in conversions on a webpage in only a few weeks, try making multiple, radical changes instead of testing one variable at a time. Take Weather.com, for example: They changed many different variables on one of their landing pages all at once, including the page design, headline, navigation, and more. The result? A whopping 225% increase in conversions.

2) You don’t provide context for your conversion rates.

When you read that line about the 225% lift in conversions on Weather.com, did you wonder what I meant by "conversions?"

If you did, then you’re thinking like a CRO.

Conversion rates can measure any number of things: purchases, leads, prospects, subscribers, users. It all depends on the goal of the page. Just saying “we saw a huge increase in conversions” doesn’t mean much if you don’t tell people what counts as a conversion. In the case of Weather.com, I was referring specifically to trial subscriptions: Weather.com saw a 225% increase in trial subscriptions on that page. Now the meaning of that conversion rate increase is much clearer.

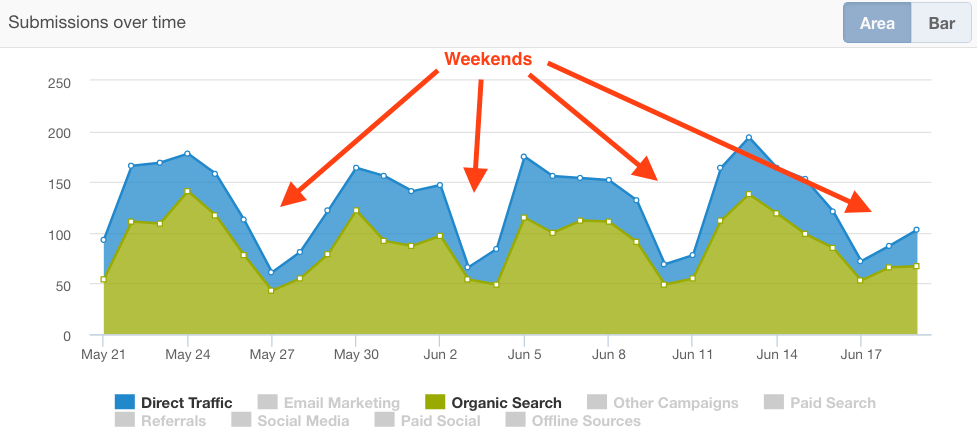

But even stating the metric isn’t telling the whole story. When exactly was that test run? Different days of the week and of the month can yield very different conversion rates.

For that reason, even if your test achieves 98% significance after three days, you still need to run it for the rest of the full week, because conversion rates can vary widely from one day of the week to the next. The same goes for months: Don’t run a test during the holiday-heavy month of December and expect the results to be the same as if you’d run it in March. Seasonality will affect your conversion rate.
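Once you know how many visitors a test needs, you can turn that number into a run time that always covers complete weeks. This is a minimal sketch; the visitor counts are hypothetical, and the one-week minimum (`min_weeks`) is an assumed default rather than a fixed rule.

```python
import math


def planned_test_days(visitors_needed: int, daily_visitors: float,
                      min_weeks: int = 1) -> int:
    """Days to run a test: enough traffic to reach the sample size,
    rounded UP to whole weeks so every day of the week is equally represented."""
    if visitors_needed <= 0 or daily_visitors <= 0 or min_weeks < 1:
        raise ValueError("inputs must be positive")
    days_of_traffic = math.ceil(visitors_needed / daily_visitors)
    weeks = max(math.ceil(days_of_traffic / 7), min_weeks)
    return weeks * 7


# Hypothetical: the test needs 4,000 visitors and the page gets 1,000 a day.
print(planned_test_days(4_000, 1_000))   # 4 days of traffic, rounded up to 7
print(planned_test_days(10_000, 1_000))  # 10 days of traffic, rounded up to 14
```

Rounding up to whole weeks only evens out day-of-week swings; it doesn't correct for seasonal effects like the December example.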

Other things that can have a major impact on conversion rate? Device type is one. Visitors might be willing to fill out that longer form on desktop, but are mobile visitors converting at the same rate? Better investigate. Channel is another: Be wary of reporting “average” conversion rates. If some channels have much higher conversion rates than others, you should consider treating the channels differently.
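Segmenting is straightforward once each visit records its device and channel. The sketch below uses made-up traffic and a hypothetical `Visit` record to show how a single blended conversion rate can hide large differences between segments.

```python
from collections import defaultdict, namedtuple

# Hypothetical visit record: which device, which channel, and whether it converted.
Visit = namedtuple("Visit", ["device", "channel", "converted"])


def conversion_rate_by(visits, field):
    """Conversion rate per segment, where `field` is 'device' or 'channel'."""
    totals, conversions = defaultdict(int), defaultdict(int)
    for visit in visits:
        segment = getattr(visit, field)
        totals[segment] += 1
        conversions[segment] += int(visit.converted)
    return {segment: conversions[segment] / totals[segment] for segment in totals}


# Hypothetical traffic: 1,200 visits and 75 conversions in total.
visits = (
    [Visit("desktop", "organic", True)] * 30 + [Visit("desktop", "organic", False)] * 470
    + [Visit("mobile", "organic", True)] * 5 + [Visit("mobile", "organic", False)] * 495
    + [Visit("desktop", "email", True)] * 40 + [Visit("desktop", "email", False)] * 160
)

overall = sum(v.converted for v in visits) / len(visits)
print(f"Blended: {overall:.2%}")  # one number that hides the spread below
print(conversion_rate_by(visits, "device"))
print(conversion_rate_by(visits, "channel"))
```

Here the blended rate is 6.25%, yet desktop converts at 10% and mobile at 1%, and email converts far better than organic. Reporting only the average would hide both gaps.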

Finally, remember that conversion rate isn’t the most important metric for your business. What matters is that your conversions lead to revenue for the company. If you made your product free, I’ll bet your conversion rates would skyrocket, but you wouldn’t be making any money, would you? Conversion rate doesn’t always tell you whether your business is doing better than it was. Don’t think about conversions in a vacuum, or you may steer off course.
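One way to keep revenue in view is to report revenue per visitor next to conversion rate for each variant. The figures in this sketch are hypothetical, chosen to show a variant that wins on conversion rate while losing on revenue.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    visitors: int
    conversions: int
    revenue: float  # total revenue attributed to this variant's visitors

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.visitors

    @property
    def revenue_per_visitor(self) -> float:
        return self.revenue / self.visitors


# Hypothetical numbers: B converts more visitors, but at a much lower price point.
a = VariantResult("A (regular price)", visitors=10_000, conversions=200, revenue=20_000.0)
b = VariantResult("B (steep discount)", visitors=10_000, conversions=500, revenue=10_000.0)

for variant in (a, b):
    print(f"{variant.name}: {variant.conversion_rate:.1%} conversion, "
          f"${variant.revenue_per_visitor:.2f} revenue per visitor")
```

Variant B converts 5% of visitors versus 2% for A, but brings in $1.00 per visitor versus $2.00.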

3) You don’t really understand the statistics.

One of the biggest mistakes I made when I first started learning CRO was thinking I could rely on what I remembered from my college statistics courses to run conversion tests. Just because you’re running experiments does not make you a scientist.

Statistics is the backbone of CRO, and if you don’t understand it inside and out, then you won’t be able to run proper tests and could seriously derail your marketing efforts.

What if you stop your test too early because you didn’t wait to achieve 98% statistical significance? After all, isn’t 90% good enough?

No, and here’s why: Think of statistical significance like placing a bet. Are you really willing to bet on 90% odds? If there’s actually no difference between your designs, a 90% threshold still gives you a 1-in-10 chance of crowning a “winner” that’s really just random noise. Declaring a winner at 90% is like saying, “I’m willing to bet everything on this design, even though I’ve accepted a 1-in-10 chance of being fooled.” That’s just not good enough.
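You can see what a 90% threshold costs by simulating A/A tests, where both variants are identical and any "winner" is a false positive. This minimal sketch assumes a pooled two-proportion z-test and hypothetical traffic (2,000 visitors per variant, 5% true conversion rate); it illustrates the idea and is not a replacement for a proper testing tool.

```python
import math
import random
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test.

    Null hypothesis: variants A and B share the same true conversion rate.
    """
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:  # nobody converted (or everybody did): no evidence either way
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def aa_false_winner_rate(confidence: float, trials: int = 1_000,
                         visitors: int = 2_000, true_rate: float = 0.05,
                         seed: int = 7) -> float:
    """Simulate A/A tests (identical arms) and return the share that still
    clear the significance bar, i.e. the false-positive rate."""
    rng = random.Random(seed)
    alpha = 1 - confidence
    false_winners = 0
    for _ in range(trials):
        conv_a = sum(rng.random() < true_rate for _ in range(visitors))
        conv_b = sum(rng.random() < true_rate for _ in range(visitors))
        if two_proportion_p_value(conv_a, visitors, conv_b, visitors) < alpha:
            false_winners += 1
    return false_winners / trials


for level in (0.90, 0.95):
    rate = aa_false_winner_rate(level)
    print(f"{level:.0%} threshold: {rate:.1%} of A/A tests declared a 'winner'")
```

Expect roughly 10% of these no-difference tests to produce a "winner" at the 90% threshold and roughly 5% at 95%; the exact figures vary with the random seed.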

If you need a statistics refresher, don’t panic. It’ll take discipline and practice, but it’ll make you a much better marketer, and it’ll make your testing methodology much, much tighter. Start by reading this Moz post by Craig Bradford, which covers sample size, statistical significance, confidence intervals, and percentage change.
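As a small companion to those concepts, here is a sketch that reports percentage change (relative lift) alongside a confidence interval for the difference in conversion rates. It uses a simple Wald interval, an assumption chosen for brevity and not necessarily the method the Moz post describes; the conversion counts are hypothetical.

```python
import math
from statistics import NormalDist


def lift_summary(conv_a: int, n_a: int, conv_b: int, n_b: int,
                 confidence: float = 0.95):
    """Return (relative lift of B over A, (low, high) interval for p_b - p_a).

    The interval is a Wald (normal-approximation) interval: reasonable for
    large samples, unreliable for very small ones.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    relative_lift = (p_b - p_a) / p_a  # the "percentage change"
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    diff = p_b - p_a
    return relative_lift, (diff - z * se, diff + z * se)


# Hypothetical results: 200 of 10,000 (A) versus 250 of 10,000 (B).
lift, (low, high) = lift_summary(200, 10_000, 250, 10_000)
print(f"Relative lift: {lift:.0%}")
print(f"95% CI for the difference: {low:.2%} to {high:.2%}")
```

For these counts the relative lift is 25%, and the 95% interval for the absolute difference (about 0.1 to 0.9 percentage points) excludes zero.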

4) You don’t experiment on pages or campaigns that are already doing well.

Just because something is doing well doesn’t mean you should just leave it be. Often, it’s these marketing assets that have the highest potential to perform even better when optimized. Some of our biggest CRO wins here at HubSpot have come from assets that were already performing well.

I’ll give you two examples.

The first comes from a project run by Pam Vaughan on HubSpot’s web strategy team, called “historical optimization.” The project involved updating and republishing old blog posts to generate more traffic and leads.

But this didn’t mean updating just any old blog posts; it meant updating the blog posts that were already the most influential in generating traffic and leads. In her attribution analysis, Pam made two surprising discoveries:

- 76% of our monthly blog views came from "old" posts (in other words, posts published prior to that month).

- 92% of our monthly blog leads also came from "old" posts.

Why? Because these were the blog posts that had slowly built up search authority and were ranking on search engines like Google. They were generating a ton of organic traffic month after month after month.

The goal of the project, then, was to figure out: a) how to get more leads from our high-traffic but low-converting blog posts; and b) how to get more traffic to our high-converting posts. By optimizing these already high-performing posts for traffic and conversions, we more than doubled the number of monthly leads generated by the old posts we've optimized.
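The two goals above amount to sorting posts by traffic and conversion rate. The sketch below is a hypothetical illustration of that triage, not the analysis Pam ran: it uses made-up posts, and its median cut-offs are an assumption; your own thresholds might come from business targets instead.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Post:
    slug: str
    views: int  # views in the reporting month
    leads: int  # leads in the reporting month

    @property
    def conversion_rate(self) -> float:
        return self.leads / self.views if self.views else 0.0


def optimization_buckets(posts):
    """Return (needs_more_leads, needs_more_traffic) lists of post slugs.

    Cut-offs are the median views and median conversion rate (an assumption).
    """
    view_cut = median(p.views for p in posts)
    rate_cut = median(p.conversion_rate for p in posts)
    needs_more_leads = [p.slug for p in posts
                        if p.views >= view_cut and p.conversion_rate < rate_cut]
    needs_more_traffic = [p.slug for p in posts
                          if p.views < view_cut and p.conversion_rate >= rate_cut]
    return needs_more_leads, needs_more_traffic


# Hypothetical monthly numbers for four old posts.
posts = [
    Post("seo-basics", views=50_000, leads=100),       # high traffic, 0.2%
    Post("email-templates", views=40_000, leads=800),  # high traffic, 2.0%
    Post("crm-guide", views=3_000, leads=90),          # low traffic, 3.0%
    Post("old-news-roundup", views=2_000, leads=4),    # low traffic, 0.2%
]
print(optimization_buckets(posts))
```

With this data, `seo-basics` lands in the "needs more leads" group and `crm-guide` in the "needs more traffic" group.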

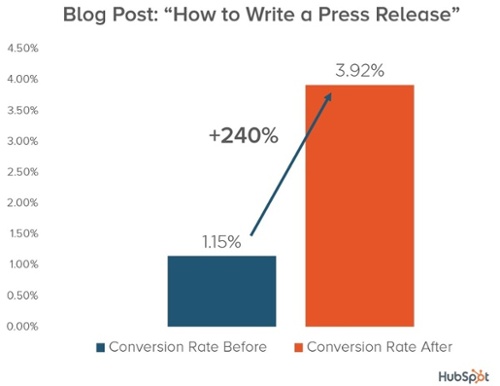

Another example? In the last few weeks, Nick Barrasso from our marketing acquisition team did a leads audit of our blog. He discovered that some of our best-performing blog posts for traffic were actually leading readers to some of our worst-performing offers.

To give a lead conversion lift to 50 of these high-traffic, low-converting posts, Nick conducted a test in which he replaced each post’s primary call-to-action with a call-to-action leading visitors to an offer that was most tightly aligned with the post’s topic and had the highest submission rate. After one week, these posts generated 100% more leads than average.
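The selection rule in that test (pick the offer that matches the post's topic and has the highest submission rate) is easy to express in code. This sketch assumes each post and offer carries a single topic tag and that submission rates are already known; the offers and posts are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Offer:
    name: str
    topic: str              # single topic tag (an assumption of this sketch)
    submission_rate: float  # form submissions / offer landing-page views


def best_offer_for(post_topic: str, offers: List[Offer]) -> Optional[Offer]:
    """Highest-submission-rate offer on the post's topic, or None if none match."""
    matching = [offer for offer in offers if offer.topic == post_topic]
    return max(matching, key=lambda offer: offer.submission_rate, default=None)


# Hypothetical offers and high-traffic posts.
offers = [
    Offer("Email Templates Kit", "email", 0.32),
    Offer("Email Marketing Ebook", "email", 0.18),
    Offer("SEO Checklist", "seo", 0.25),
]
posts = {
    "subject-line-tips": "email",
    "keyword-research-101": "seo",
    "podcast-gear": "audio",
}

for slug, topic in posts.items():
    offer = best_offer_for(topic, offers)
    print(f"{slug}: {offer.name if offer else 'keep current CTA'}")
```

Posts with no matching offer keep their current CTA rather than getting an off-topic one.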

The bottom line is this: Don’t focus solely on optimizing marketing assets that need the most work. Many times, you’ll find that the lowest-hanging fruit are pages that are already performing well for traffic and/or leads and, when optimized even further, can result in much bigger lifts.

5) You base your CRO tests on tactics instead of research.

When it comes to CRO, process is everything. Remove your ego and assumptions from the equation, stop relying on individual tactics to optimize your marketing, and instead take a systematic approach to CRO.

Your CRO process should always start with research. In fact, conducting research should be the step you spend the most time on. Why? Because the research and analysis you do in this step will lead you to the problems — and it’s only when you know where the problems lie that you can come up with a hypothesis for overcoming them.

Remember that test I just talked about that doubled leads for 50 top HubSpot blog posts in a week? Nick didn’t just wake up one day and realize our high-traffic blog posts might be leading to low-performing offers. He discovered this only by doing hours and hours of research into our lead gen strategy from the blog.

Paddy Moogan wrote a great post on Moz on where to look for data in the research stage. What does your sales process look like, for example? Have you ever reviewed the full funnel? “Try to find where the most common drop-off points are and take a deeper dive into why,” he suggests.

Here’s an (oversimplified) overview of what a CRO process should look like:

- Step 1: Do your research.

- Step 2: Form and validate your hypothesis.

- Step 3: Establish your control, and create a treatment.

- Step 4: Conduct the experiment.

- Step 5: Analyze your experiment data.

- Step 6: Conduct a follow-up experiment.

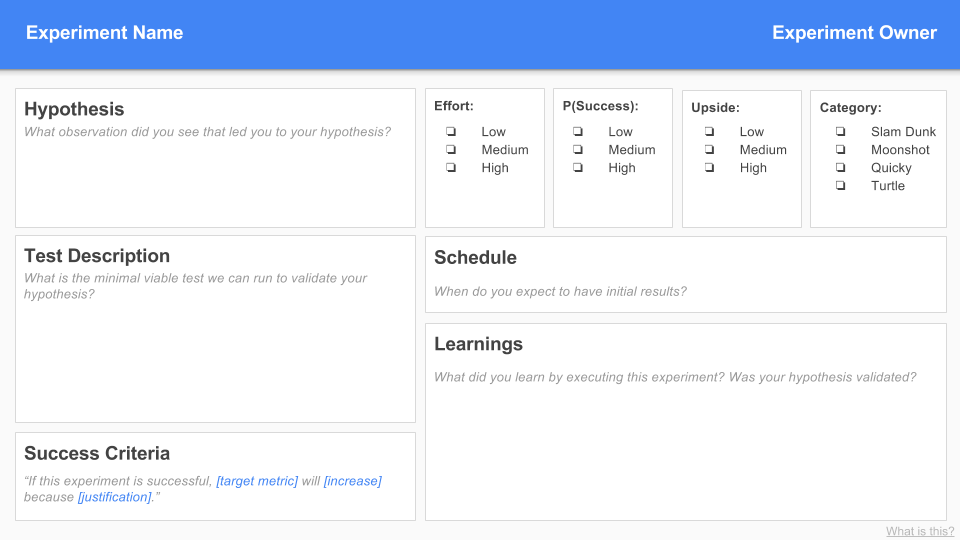

As you go through these steps, be sure you’re recording your hypothesis, test methodology, success criteria, and analysis in a replicable way. My team at HubSpot uses the template below, which was inspired by content from Brian Balfour’s online Reforge Growth programs. We’ve created an editable version in Google Sheets here that you can copy and customize yourself.
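If you'd rather keep these records in code or export them to a spreadsheet, a structured record works too. The sketch below is a hypothetical format, not the HubSpot template: its field names are assumptions that mirror the elements listed above (hypothesis, methodology, success criteria, analysis), and it labels a finished test a "win" or a "learning" against a lift threshold agreed before the test started.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class ExperimentRecord:
    name: str
    hypothesis: str      # what you expect to happen, and why
    methodology: str     # test type, pages, traffic split, planned duration
    success_metric: str  # the one metric that decides the outcome
    minimum_lift: float  # relative lift agreed up front, e.g. 0.10 = +10%
    observed_lift: Optional[float] = None
    analysis: str = ""
    follow_ups: List[str] = field(default_factory=list)

    def outcome(self) -> str:
        """'running' until results are in; then 'win' or 'learning'."""
        if self.observed_lift is None:
            return "running"
        return "win" if self.observed_lift >= self.minimum_lift else "learning"


# Hypothetical experiment and results.
record = ExperimentRecord(
    name="Shorter demo request form",
    hypothesis="If we cut the form from 7 fields to 4, demo requests will rise, "
               "because mobile visitors abandon long forms.",
    methodology="A/B test, 50/50 split, run in whole weeks until sample size is reached.",
    success_metric="demo requests per visitor",
    minimum_lift=0.10,
)
print(record.outcome())  # no results recorded yet
record.observed_lift = 0.04
record.analysis = "Lift below threshold on desktop; mobile segment still too small."
record.follow_ups.append("Retest on mobile traffic only.")
print(record.outcome())
print(json.dumps(asdict(record), indent=2))
```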

Don’t forget the last step in the process: Conduct a follow-up experiment. What can you refine for your next test? How can you make improvements?

6) You give up after a "failed" test.

One of the most important pieces of advice I’ve ever gotten around CRO is this: “A test doesn’t ‘fail’ unless something breaks. You either get the result you want, or you learned something.”

It came from Sam Woods, a growth marketer, CRO, and copywriter at HubSpot, after I used the word “fail” a few too many times after months of unsuccessful tests on a single landing page.

What he taught me was a major part of the CRO mindset: Don’t give up after the first test. (Or the second, or the third.) Instead, approach every test systematically and objectively, putting aside your previous assumptions and any hope that the results would swing one way or the other.

As Peep Laja said, “Genuine CROs are always willing to change their minds.” Learn from tests that didn’t go the way you expected, use them to tweak your hypothesis, and then iterate, iterate, iterate.

I hope this list has inspired you to double down on your CRO skills and take a more systematic approach to your experiments. Mastering conversion rate optimization comes with a steep learning curve — and there’s really no cutting corners. You can save a whole lot of time (and money) by avoiding the mistakes I outlined above.

Have you ever made any of these CRO mistakes? Do you have any CRO mistakes to add to the list? Tell us about your experiences and ideas in the comments.

Great insight! One of the things we often do with clients is explain that how long they need to run a CRO test depends on the number of impressions, not on the number of hours, days, or weeks the test has been running.

Making an adjustment without accounting for statistical significance and margin of error can really hurt your business.

We often start our CRO tests with qualitative runs via User Testing before moving on to a quantitative approach to verify our hypothesis. You can often identify where your ideas won't work as expected before you even need thousands of page views to verify CRO improvements.

I've seen the same problem with clients as well. Clients typically expect a fast turnaround on action items, and CRO tests often take longer, particularly on sites with an ultra-specific audience.

Optimization often comes down to paying attention to what your customers ask you and to the problems they run into when trying to place an order, request a quote, or simply look for information. Many times we can't spot these problems ourselves, because we look at the same website every day and overlook certain things. Very good post and explanation, Lindsay.

In my opinion, optimizing for each customer is best.

Very true, Lindsay! When I was new to the SEO industry, I made some mistakes. I think we all make CRO mistakes, but what matters is what we learn from them. I want to add a few more common CRO mistakes:

- Choosing responsive design over mobile-first design.

- Not identifying or understanding the audience.

Thanks.

Beautiful post. I am an aerospace engineer and, believe it or not, we make the same mistakes in our systems engineering. I think the most pervasive mistakes are basing decisions on incomplete or insignificant data or, worse yet, no data at all and just a gut feeling. Data-driven engineering (or CRO) isn't practiced as thoroughly as you might expect.

There is a classic social experiment, the source of what's called the Hawthorne effect, that speaks to the interaction between the observer and the experiment. At Western Electric's Hawthorne Works factory, researchers ran an experiment to determine whether workers were more productive with brighter lights. They increased the lighting and production increased. Then they decreased the lighting and production increased even more. How could this be? It was the observer's paradox: the increase came because people were being watched, not because of the change in lighting.

The observer's paradox seems like it would be a factor in CRO in two of the stories you told. First, a redesign decision based on very recent data from a very recent change could be measuring only the effect of change itself on conversions: your old readers saw a new box and clicked it. Second, a manager with a pet strategy could be gathering only the data that supports his pet project. These are the most insidious type of data abusers. They have a long list of anecdotal evidence that seems impressive by sheer volume, but each item is essentially useless. By the time you use complex statistics to disprove half of the list, the audience has lost interest but not its belief in the remaining anecdotal evidence.

Thank you for taking the time to write this piece. It's a great one to keep on hand to challenge the data naysayers and remind yourself to do a diligent job.

By definition, optimisation is never-ending. Thanks for the detailed reminder, Lindsay!

Love the detail here in this post! True marketers test...and have reason and math behind it! Great post...thanks for sharing!

Excellent post Lindsay!

It is true that we should not neglect a single aspect of our business. Take the case of a website with an incredible, sleek, modern design, excellent SEO positioning, and an SEM campaign with a good budget, but whose product is the most expensive or not the best in its category. Its conversion rate will keep going down day by day.

Great one! All the points you mentioned in the discussion are really great, and if you can focus on fixing the errors you mentioned, I guess your campaign will rock.

Thanks for sharing

Useful insight Lindsay and thank you for the free resource. My key takeaway from your post is to focus on content that is already performing well and improve it. I also wondered if you had any advice for small low traffic sites at an early stage of development, how to go about researching insight on visitors to the site - would doing a survey work best? I can see that A/B testing wouldn’t provide enough statistical information to be significant.

I completely agree when it comes to A/B testing and tracking analytics. Misunderstanding the results and conversion pages can lead to a lot of wasted effort. You need to test pages carefully, keeping many factors in mind, such as leads, click-through rates, sales, and bounce rate. I remember when our main landing page was updated, it led to a decrease in leads and an increased bounce rate. Rather than reverting to the previous page, I experimented with different variations. Though this affected subscriptions, sales increased. So we need to keep testing our pages, and it's worth staying up to date with what other marketers and entrepreneurs are doing. Test it and see if it works for your business as well.

Awesome post! I can use a lot of the points in my daily work!

Interesting point of view in this article. In my experience, the page meant to get the conversions (the typical landing page) first has to be optimized from an SEO point of view. Keep in mind that users can arrive through AdWords or organic search.

To get good conversions, you have to let some time pass and see how users behave. A good idea, from my point of view, is for the page to identify who the company is, with its address and all its details, to build trust. Also, don't include too many fields in the registration form; with too many fields, users tend to leave the page. Ask only for the essentials, for example name, phone, email, and a field for the query, and include a call to action on the form. Depending on the type of business (a law firm, for example), you can offer a free initial consultation and tell users that sending the form doesn't commit them to anything.

Also, depending on the business, there may be seasonality, and conversions may go down. For example, a store that sells swimsuits will obviously see its sales drop significantly in winter. Likewise, a beer company's sales naturally increase in summer, on hot days. Tools like Semrush can help us with seasonality.

There are times when we believe that things are not doing well and that is not true, it can simply be a matter of seasonality.

Conversion rate optimization is huge, especially if you follow inbound marketing methodology. Great post on this and definitely agree with all 6 CRO mistakes, especially "Giving Up After A Failed Test". CRO takes time and persistence. Love the insight and examples, very well-written Lindsay, and on a critical topic too.

Does anyone have a favorite A/B testing WP plugin?

Great post! Conversion Rate Optimization is my biggest weakness. I found the info really useful. Thanks!

Sounds good. Thanks for sharing.

One of the worst Conversion Rate Optimization mistakes that we can make when setting up testing is not evaluating the types of visitors that arrive at our website.

I see A/B testing as an important part of SEO; however, it can be difficult to set up the test correctly because of seasonality, the long time it takes to rank high, and the difficulty of interpreting the results correctly. I've mostly been using VWO, but since Google introduced its own tool, we've switched to that.

When you build an optimization strategy for your company, you'll first align your program’s testing efforts with your company goals. Without a strategic guide, you run the risk of building unfocused or inefficient tests that consume time and resources without producing results. So first, create a goal tree.

A goal tree helps you:

I recommend that you focus your testing efforts on the KPIs at the bottom of the tree. These metrics, like average order quantity, are opportunities to optimize for profitability. Moreover, focusing on smaller pieces rather than the top metric can help you raise the win rate, since experiments are faster to run and more likely to reach statistical significance. The lift you generate rolls directly up to the metric at the top.

It’s a good idea to combine your goal tree research with data from your analytics platform. This can help you figure out where to optimize for the biggest impact. Imagine, for example, that the KPI you’re targeting is average order quantity.

Maybe your analytics tell you that certain products are purchased in larger quantities: non-perishable pet food versus expensive televisions, for instance. You may want to test a strategy that promotes those products, by offering a discount on pet food bundles or suggesting certain purchase quantities on the checkout page.

If you see improvement that confirms your hypothesis, consider leveraging this insight through personalization. On your landing page, show visitors who've browsed pet food in the past a banner suggesting they build up a winter stock of pet food. Match your site experience to SEM campaigns that promote high-value pet food brands. Create humorous pet food campaigns for younger audiences, and value-oriented messaging for discount shoppers.

Goal trees focus your optimization strategy on concrete metrics, so your team’s efforts to design and manage campaigns and experiments make an impact.

Great article, thanks! As a small business owner and DIY SEOer, these types of articles are a huge help!

Good post. It really helps to learn how to avoid common CRO mistakes.

I ran an A/B test. Version A reduced the bounce rate by about 5%, increased time on site by about 1 minute, and increased phone calls (stupid questions). Version B had a higher bounce rate and less time on site, but increased sales without the stupid calls...

Version A improved my SEO.

Version B grew my business.

Once I started running A/B tests, I couldn't stop: I needed to run one, then another, and another, and another...

One of the most common mistakes in CRO projects is ignoring whether the site receives enough traffic, and this is definitely a factor you need to take into consideration. Either make sure you have enough traffic for A/B tests to get significant results, or focus your efforts on customer acquisition first and let the CRO project follow once your traffic increases.

Thanks for sharing. This is really helpful information, and the examples for each mistake make it a really helpful read.

Lindsay, you nailed it! This speaks volumes to my experience. I've been involved in campaigns that had so much potential but because the initial "tactic" "failed" they were scrapped, and the cycle was started again.

If someone asked me for the most important phrase you could learn in business, I'd say it's "there is no such thing as failure, just learning experiences." If you don't live by that, then you're not meant for business.

Hi, thank you for sharing this post. It is very helpful, explains a lot, and is very informative. I will definitely visit here again.

I never even knew that I was making the same CRO mistake over and over before I read this! Thanks for sharing.